simple_regression_data <- vroom("http://nfl-book.bradcongelio.com/simple-reg")5 Advanced Model Creation with NFL Data

5.1 Introduction to Statistical Modeling with NFL Data

The process of conducting statistical modeling with NFL data is difficult to cover in a single chapter of a book, as the topic realistically deserves an entire book as the field of modeling and machine learning is vast and complex, and continues to grow as a result of access to new data and the releases of packages (as of November 2020, over 16,000 packages are available in the R ecosystem).(Wikipedia, 2023) The list of options for conducting statistical analysis and machine learning is vast, with the more widely-used ones including caret (combined functions for creating predictive models), mgcv (for generalized additive models), lme4 (for linear mixed effects models), randomForest (for creating random forests models), torch (for image recognition, time series forecasting, and audio processing), and glmnet (for lasso and elastic-net regression methods). A newer addition to the modeling and machine learning ecosystem is tidymodels, which is a collection of various packages that operate cohesively using tidyverse principles.

While it is not possible for this chapter to cover every aspect of modeling and machine learning, its goal is to provide the foundation in both to provide you with the necessary skills, understanding, and knowledge to independently explore and analyze NFL data using different methodologies and tools. The techniques covered in this chapter, from creating a simple linear regression to a predictive XGBoost model, are building blocks to all further analysis and machine learning you will conduct. In doing so, you will learn how to use built-in options such as stats to more technical packages such as tidymodels.

To that end, by mastering these introductory concepts and techniques, you not only learn the process and coding needed to create models or do machine learning, but you will begin developing an analytical mindset which will allow you to understand why one statistical approach is not correct for your data and which one is, how to interpret the results of your model, and - when necessary - how to correct issues and problems in the process as they arise. The fundamentals provided in this chapter, I believe, serve as a actionable stepping stone for a further journey into the modeling and machine learning in the R programming language, with or without the use of NFL data.

5.2 Regression Models with NFL Data

Regression models are the bedrock of many more advanced machine learning techniques. For example, the “decision tree” process that is conducted in random forests models and XGBoost are created based on the split in variable relationships and neural networks are, at their core, just a intricate web of regression-like calculations. Because of this, regression models are an outstanding starting point for not only learning the basics of modeling and machine learning in R, but an important step in comprehending more advanced modeling techniques.

As you will see, a regression model is simply a mathematical determination of the relationship between two or more variables (the response and dependent variables). In the context of the NFL, a team’s average points per game would be a response variable while those metrics that cause the average points per game are the predictor variables (average passing and rushing yards, turnovers, defensive yards allowed, etc.). The results of regression models allow us to determine which predictor variables have the largest impact on the response variable. Are passing yards a more predictive variable to average points per game than rushing yards? A regression model is capable of answering that question.

This section starts with exploring simple linear regression and then extends this knowledge to creating both multiple linear and logistic regression models which deal, respectively, with multiple predictors and categorical dependent variables. After, we will focus on creating binary and multinomial regression models.

5.2.1 Simple Linear Regression

A linear regression is a fundamental statistical technique that is used to explore the relationship between two variables - the dependent variable (also called the “response variable”) and the independent variables (also called the “predictor variables”). By using a simple linear regression, we can model the relationship between the two variables as a linear equation that best fits the observed data points.

A simple linear regression aims to fit a straight line through all the observed data points in such a way that the total squared distance between the actual observations and the values predicted by the model are minimal. This line is often referred to as either the “line of best fit” or the “regression line” and it represents the interaction between the dependent and independent variables. Mathematically, the equation for a simple linear regression is as follows:

\[ Y = {\beta}_0 + {\beta}_1 X + \epsilon \]

- \(Y\), in the above equation, is the dependent variable where the \(X\) represents the independent variable.

- \({\beta}_o\) is the intercept of the regression model.

- \({\beta}_1\) is the slope of the model’s “line of best fit.”

- \(\epsilon\) represents the error term.

To better illustrate this, let’s use basic football terms using the above regression equation to compare a team’s offensive points scored in a season based on how many offensive yards it accumulated. The intercept (\({\beta}_o\)) represents the value when a team’s points scored and offensive yards are both zero. The slope (\({\beta}_1\)) represents the rate of change in \(Y\) as the unit of \(X\) changes. The error term (\(\epsilon\)) is represents the difference between the actual observed values of the regression’s dependent variable and the value as predicted by the model.

Using our points scored/total yards example, a team’s total yards gained is the independent variable and total points scored is the dependent variable, as a team’s total yardage is what drives the change in total points (in other words, a team’s total points is dependent on its total yardage). A team will not score points in the NFL if it is not also gaining yardage. We can view this relationship by building a simple linear regression model in R using the lm() function.

The lm() function is a built-in RStudio tool as part of the stats package and stand for “linear model.” It is used, as described above, to fit a linear regression to the data that you provide. The completed regression estimates the coefficients of the data and also includes both the intercept and slope, which are the main factors in explaining the relationship between the response and predictor variables.

The lm() function requires just two argument in order to provide results: the formula and the data frame to use:

model_results <- lm(formula, data)

The formula argument requires that you specify both the response and predictor variables, as named in your data frame, in the structure of y ~ x wherein y is the response variable and x is the predictor. In the case that you have more than one predictor variable, the + is used to add to the formula:

model_results <- lm(y ~ x1 + x2 + x3, data)

The returned coefficients, residuals, and other statistical results of the model are returned into your RStudio environment in a lm data object. There are several ways to access this data and are discussed below in further detail.

To put theory into action, let’s build a simple linear regression model that explores the relationship between the total yardage earned by a team over the course of a season and the number of points scored. To begin, gather the prepared information into a data frame titled simple_regression_data by running the code below.

The data contains the total yardage and points scored for each NFL team between the 2012 and 2022 seasons (not including the playoffs). Before running our first linear regression, let’s first begin by selecting just the 2022 data and create a basic visualization to examine the baseline relationship between the two variables.

regression_2022 <- simple_regression_data %>%

filter(season == 2022)

teams <- nflreadr::load_teams(current = TRUE)

regression_2022 <- regression_2022 %>%

left_join(teams, by = c("team" = "team_abbr"))

ggplot(regression_2022, aes(x = total_yards, y = total_points)) +

geom_smooth(method = "lm", se = FALSE,

color = "black",

linetype = "dashed",

size = .8) +

geom_image(aes(image = team_logo_wikipedia), asp = 16/9) +

scale_x_continuous(breaks = scales::pretty_breaks(),

labels = scales::comma_format()) +

scale_y_continuous(breaks = scales::pretty_breaks()) +

labs(title = "**Line of Best Fit: 2022 Season**",

subtitle = "*Y = total_yards ~ total_points*") +

xlab("Total Yards") +

ylab("Total Points") +

nfl_analytics_theme()

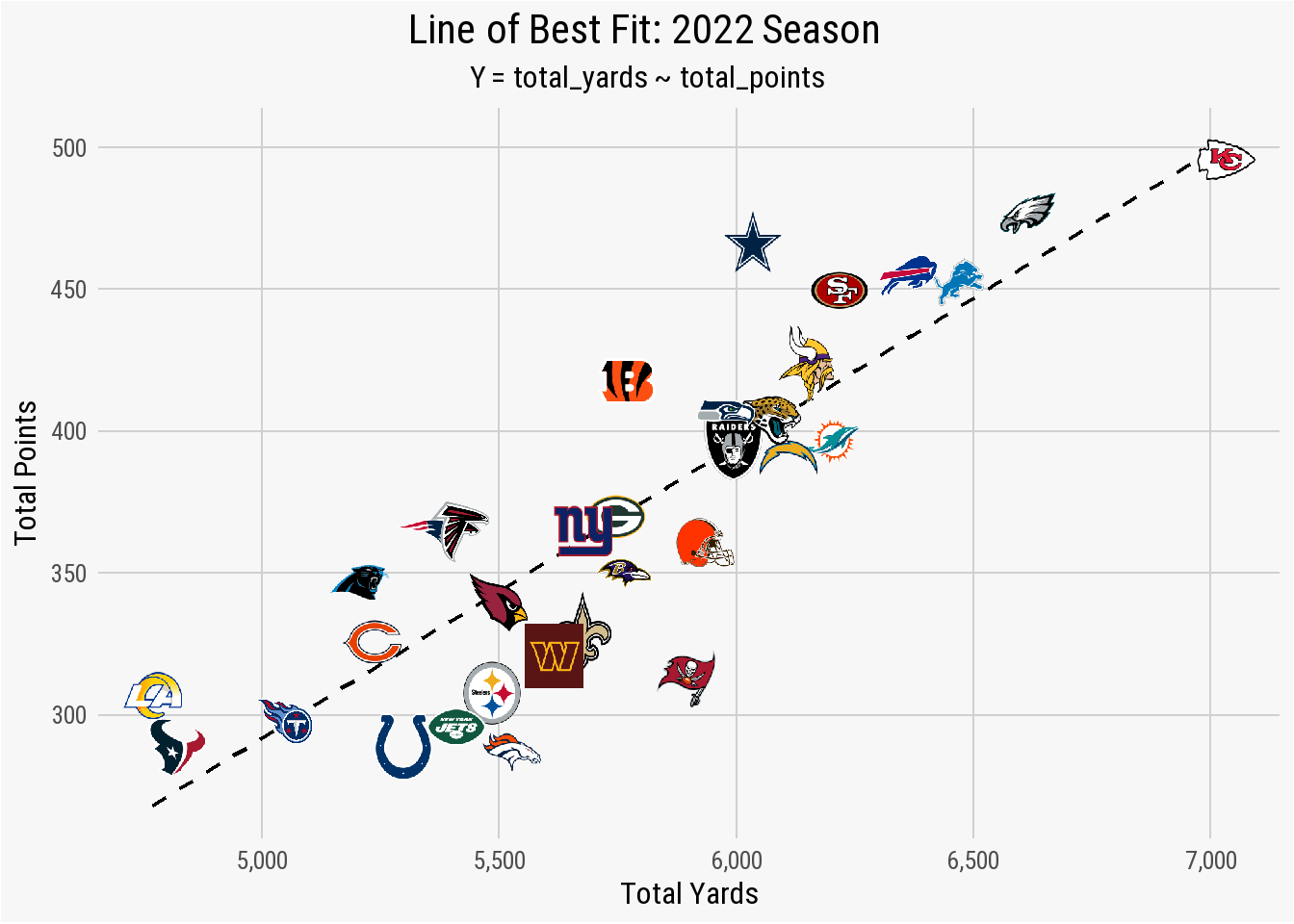

The plot shows that a regression between total_yards and total_points results in several teams - the Titans, Giants, Packers, Raiders, Jaguars, and Chiefs - being fitted nearly perfectly with the line of best fit. These teams scored points based on total yards in a linear fashion. The Cowboys, however, are well above the regression line. This indicates that Dallas scored more total points than what the relationship between total_yards and total_points found as “normal” for a team that earned just a hair over 6,000 total yards. The opposite is true for the Colts, Jets, and Denver. In each case, the total_points scored is below what is expected for teams that gained approximately 5,500 total yards.

The line of best fit can explain this relationship in slightly more detail. For example, the total_yards value of 5,500 cross the regression line just below the total_points value of 350. This means that a team that gains a total of 5,500 yards should - based on this fit - score just under 350 points during the season. Viewing it the other way, if you want your team to score 450 points during the upcoming season, you will need the offensive unit to gain roughly 6,500 total yards.

To further examine this relationship, we can pass the data into a simple linear regression model to start exploring the summary statistics.

results_2022 <- lm(total_points ~ total_yards,

data = regression_2022)Using the lm() function, the \(Y\) variable (the dependent) is total_yards and the \(X\) variable (the predictor) is entered as total_yards with the argument that the data is coming from the regression_2022 data frame. We can view the results of the regression model by using the summary() function.

summary(results_2022)

Call:

lm(formula = total_points ~ total_yards, data = regression_2022)

Residuals:

Min 1Q Median 3Q Max

-71.44 -22.33 1.16 19.15 68.08

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -225.0352 65.4493 -3.44 0.0017 **

total_yards 0.1034 0.0113 9.14 3.6e-10 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 32 on 30 degrees of freedom

Multiple R-squared: 0.736, Adjusted R-squared: 0.727

F-statistic: 83.5 on 1 and 30 DF, p-value: 3.6e-10You have now run and output the summary statistics for your first linear regression model that explore the relationship between an NFL team’s total yards and total points over the course of the 2022 season.

But what do they mean?

The summary() output of any regression models contains two core components: the residuals and the coefficients.

Residuals

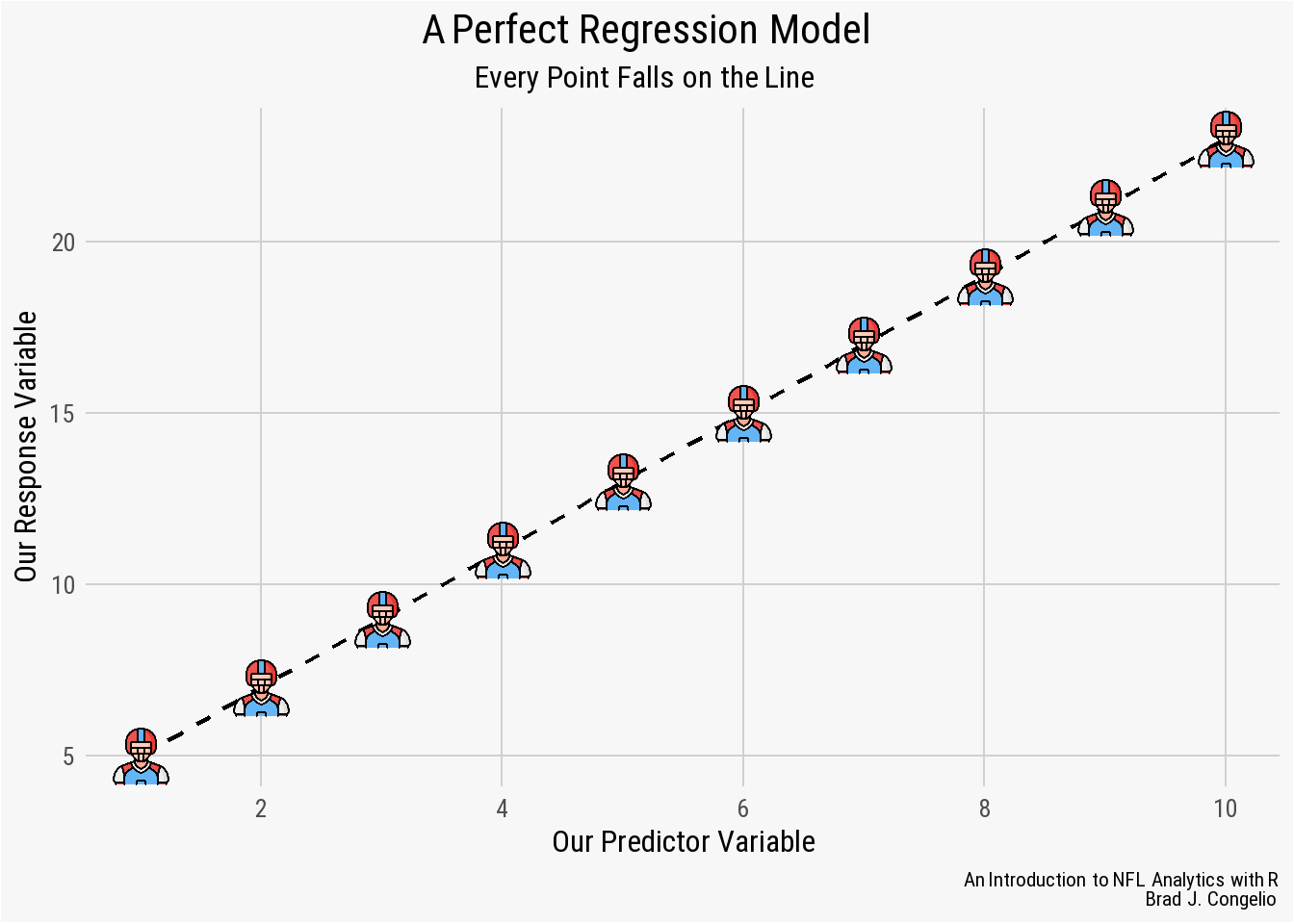

A model’s residuals are the calculated difference between the actual values of the predictor variables as found in the data and the values predicted by the regression model. In a perfect uniform relationship, all of the values in the data frame would sit perfectly on the line of best fit. Take the below graph, for example.

example_fit <- tibble(

x = 1:10,

y = 2 * x + 3)

example_perfect_fit <- lm(y ~ x, data = example_fit)

ggplot(example_perfect_fit, aes(x = x, y = y)) +

geom_smooth(method = "lm", se = FALSE, color = "black",

size = .8, linetype = "dashed") +

geom_image(image = "./images/football-tip.png", asp = 16/9) +

scale_x_continuous(breaks = scales::pretty_breaks()) +

scale_y_continuous(breaks = scales::pretty_breaks()) +

labs(title = "A Perfect Regression Model",

subtitle = "Every Point Falls on the Line",

caption = "*An Introduction to NFL Analytics with R*<br>

**Brad J. Congelio**") +

xlab("Our Predictor Variable") +

ylab("Our Response Variable") +

nfl_analytics_theme()

In this example, the regression model was able to successfully “capture” the entirety of the relationship between the predictor variable on the x-axis and the response variable on the y-axis. This means that the model leaves no unexplained or undetermined variance between the variables. As a result, we could provide the model new, unseen data and the result would predict - with 100% accuracy - the resulting values of the response variable.

However, it is rare to have real-world data be perfectly situated on the line of best fit. In fact, it is more often than not a sign of “overfitting,” which occurs when the model successfully discovers the “random noise” in the data. In such cases, a model with a “perfect line of fit” will perform incredibly poorly when introduced to unseen data.

A regression model that is not overfitted will have data points that do not fall on the line of best fit, but fall over and under it. The results of the regression model uses a simple formula to help us interpret the difference between those actual and estimated values:

residuals = observed_value - predicted_value

Information about these residual values are included first in our summary(results_2022) output and provide insight into the distribution of the model’s residual errors.

| Summary Distribution | Meaning |

The Min Distribution |

The Min distribution provides the smallest difference between the actual values of the model’s predictor variable (total points) and the predicted. In the example summary, the minimum residual is -71.443 which means that the lm() model predicted that one specific team scored 71.443 more points than it actually did.

|

The 1Q Distribution |

The 1Q distribution is based on the first quartile of the data (or where the first 25% of the model’s residual fall on the line of best fit). The 1Q residual is -22.334, which means the lm() model predicted that 25% of the teams scored 22.334 more points than the actual values.

|

The Median Distribution |

The Median distribution is the residuals from the 50th percentile of the data. The Median residual in the above summary is 1.157, which means that the lm() model - for 50% of teams - either overestimated or underestimated a teams total points by less than 1.157 points.

|

The 3Q Distribution |

Covering the third quartile of the residuals, the 3Q Distribution is 19.145 which means that 75% of the NFL teams in the data had a total points prediction either overestimated or underestimated by less than 19.145 points.

|

The Max Distribution |

The opposite of the Min distribution, the Max distribution is the largest difference between the model’s observed and predicted values for a team’s total points. In this case, for one specific team, the model predicted the team scored 68.080 points less than the actual value.

|

A model’s residuals allow you to quickly get a broad view of accurately it is predicting the data. Ideally, a well-performing model will return residuals that are small and distributed evenly around zero. In such cases, it is good first sign that the predictions are close to the actual value in the data and the model is not producing over or under estimates.

But that is not always the case.

For example, we can compare our above example residuals to the residuals produced by manually created data.

Min. 1st Qu. Median Mean 3rd Qu. Max.

-13.70 -2.18 -0.60 0.00 4.16 10.54 Compared to the residuals from the above NFL data, the residuals from the randomly created data are small in comparison and are more evenly distributed around zero. Given that, it is likely that the linear model is doing a good job at making predictions that are close to the actual value.

But that does not mean the residuals from the NFL data indicate a flawed and unreliable model.

It needs to be noted that the “goodness” of any specific linear regression model is wholly dependent on both the context and the specific problem that the model is attempting to predict. To that end, it is also a matter of trusting your subject matter expertise on matters regarding the NFL.

There could be any number of reasons that can explain why the residuals from the regression model are large and not evenly distributed from zero. For example:

Red-zone efficiency: a team that moves the ball downfield with ease, but then struggles to score points once inside the 20-yardline , will accumulate

total_yardsbut fail to producetotal_pointsin the way the model predicts.Turnovers: Similar to above, a team may rack up

total_yardsbut ultimately continue to turn the ball over prior to being able to score.Defensive scoring: a score by a team’s defense, in this model, still counts towards

total_pointsbut does not count towardstotal_yards.Strength of Opponent: At the end of the 2022 season, the Philadelphia Eagles and the New York Jets both allowed just 4.8 yards per play. The model’s predicted values of the other three teams in each respective division (NFC East and AFC East) could be incorrect because such contextual information is not included in the model.

All that to say: residuals are a first glance at the results of the data and provide a broad generalization of how the model performed without taking outside contextual factors into consideration.

Coefficients

The coefficients of the model are the weights assigned to each predictor variable and provide insight into the relationship between the various predictor and response variables, with the provided table outlining the statistical metrics.

-

Estimate - representing the actual coefficient of the model, these numbers are the mathematical relationship between the predictor and response variables. In our case, the estimate for

total_yardsis 0.1034 which means that for each additional one yard gained we can expected a team’stotal_pointsto increase by approximately 0.1034. -

Std. Error - the standard error is the numeric value for the level of uncertainty in the model’s estimate. In general, a lower standard error indicates a more reliable estimate. In other words, if we were to resample the data and then run the regression model again, we could expect the

total_yardscoefficient to vary by approximately 0.0113, on average, for each re-fitting of the model. - t value - The t-statistic value is the measure of how many standard deviations the estimate is from 0. A larger t-value indicates that it is less likely that the model’s coefficient is not equal to 0 by chance.

- Pr(>|t|) - This value is the p-value associated with the hypothesis test for the coefficient. The level of significance for a statistical model is typically 0.05, meaning a value less than this results in a rejection of the null hypothesis and the conclusion that the predictor does have a statistically significant relationship with the response variable. In the case of our regression model, a p-value of 0.00000000036 indicates a highly significant relationship.

Returning to the summary statistics of our analysis of the 2022 season, the residuals have a wide spread and an inconsistent deviation from zero. While the median residual value is the closest to zero at 1.157, it is still a bit too high to safely conclude that the model is making predictions that adequately reflect the actual values. Moreover, both tail ends of the residual values (Min and Max) are a large negative and positive number, respectively, which is a possible indication that the regression model is both over- and underestimating a team’s total_points by statistically significant amount.

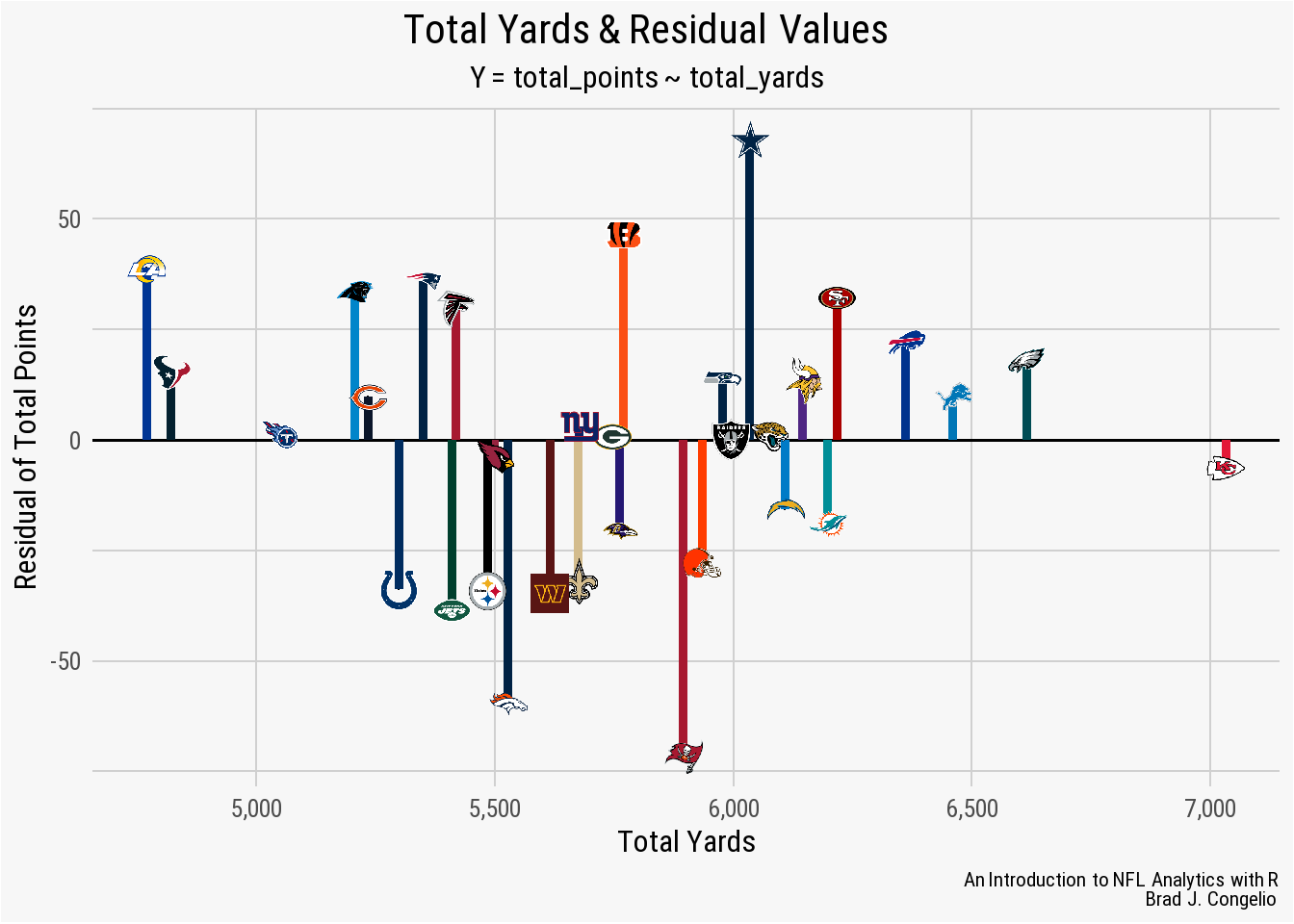

However, as mentioned, this widespread deviation from zero is likely the result of numerous factors outside the model’s purview that occur in any one NFL game. To get a better idea of what the residual values represent, we can plot the data and include NFL team logos.

regression_2022$residuals <- residuals(results_2022)

ggplot(regression_2022, aes(x = total_yards, y = residuals)) +

geom_hline(yintercept = 0, color = "black", linewidth = .7) +

stat_fit_residuals(size = 0.01) +

stat_fit_deviations(size = 1.75, color = regression_2022$team_color) +

geom_image(aes(image = team_logo_wikipedia), asp = 16/9, size = .0325) +

scale_x_continuous(breaks = scales::pretty_breaks(),

labels = scales::comma_format()) +

scale_y_continuous(breaks = scales::pretty_breaks(n = 5)) +

labs(title = "**Total Yards & Residual Values**",

subtitle = "*Y = total_points ~ total_yards*",

caption = "*An Introduction to NFL Analytics with R*<br>

**Brad J. Congelio**") +

xlab("Total Yards") +

ylab("Residual of Total Points") +

nfl_analytics_theme() +

theme(panel.grid.minor.y = element_line(color = "#d0d0d0"))

With the data visualized, it is clear that the model’s Min distribution of -71.44 is associated with the Tampa Bay Buccaneers, while the Max distribution of 68.08 is the prediction for the total points earned by the Dallas Cowboys. Because a negative residual means that the model’s predicted value is too high, and a positive residual means it was too low, we can conclude that the Buccaneers actually scored 71.4 points less than the the results of the model, while the Cowboys scored 68.08 more than predicted.

`Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -225.0352 65.4493 -3.44 0.0017 **

total_yards 0.1034 0.0113 9.14 0.00000000036 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1`˜.’ 0.1 ‘ ’ 1`The (Intercept) of the model, or where the regression line crosses the y-axis, is -225.0350. When working with NFL data, it of course does not make sense that the (Intercept) is negative. Given the model is built on a team’s total yards and total points, it seems intuitive that the regression line would cross the y-axis at the point of (0,0) as an NFL team not gaining any yards is highly unlike to score any points.

It is important to remember that the linear model attempts to position the regression line to come as close to all the individual points as possible. Because of this, it is not uncommon for regression line to not cross exactly where the x-axis and y-axis meet. Again, contextual factors of an NFL game are not accounted for in the model’s data: strength of the opponent’s defense, the quality of special teams play, defensive turnovers and/or touchdowns, field position, etc. can all impact a team’s ability to score points without gaining any yardage. The lack of this information in the data ultimately impacts the positioning of the line of best fit.

The total_yards coefficient represents the slope of the model’s regression line. It is this slope that represents how a team’s total points are predicted to change with every additional gain of one yard. In this example, the total_yards coefficient is 0.10341 - so for every additional yard gained by a team, it is expected to add 0.10341 points to the team’s cumulative amount.

The Std. Error summary statistic provides guidance on the accuracy of the other estimated coefficients. The Std. Error for the model’s (Intercept) is quite large at 65.44927. Given the ability to resample the data from NFL terms numerous times and then allowing the linear model to predict again, this specific Std. Error argues that the regression line will cross the y-axis within 65.44972 of -225.03520 in either direction. Under normal circumstances, a large Std. Error for the (Intercept) would cause concern about the validity of the regression line’s crossing point. However, given the nature of this data - an NFL team cannot score negative points - we should not have any significant concern about the large Std. Error summary statistic for the (Intercept).

At 0.01132, the Std. Error for the total_yards coefficient is small and indicates that the Estimate of total_yards - that is, the increase in points per every yard gained - is quite accurate. Given repeated re-estimating of the data, the relationship between total_yards and total_points would vary by just 0.01132, either positively or negatively.

With a t-value of 9.135, the total_yards coefficient has a significant relationship with total_points. The value of -3.438 indicates that the (Intercept) is statistically different from 0 but we should still largely ignore this relationship given the nature of the data.

The model’s Pr(>|t|) value of highly significant for total_yards and is still quite strong for the (Intercept). The value of 0.00000000036 indicates an incredibly significant relationship between total_yards and total_points.

The linear model’s Residual Standard Error is 32.04, which means that the average predicted values of total_points are 32.04 points different from the actual values in the data. The linear model was able to explain 73.56% of the variance between total_yards and total_points based on the multiple R-squared value of 0.7356. Additionally, the Adjusted R-squared value of 0.7268 is nearly identical to the multiple R2, which is a sign that the linear model is not overfitting (in this case because of the simplicity of the data). The model’s F-Statistic of 83.45 indicates a overall significance to the data, which is backed up by an extremely strong p-value.

Based on the summary statistics, the linear model did an extremely good job at capturing the relationship between a team’s total_yards and total_points. However, with residuals ranging from -71.443 to 68.080, it is likely that the model can be improved upon by adding additional information and statistics. However, before providing additional metrics, we can try to improve the model’s predictions by including all of the data (rather than just the 2022 season). By including 20-seasons worth of total_yards and total_points, we are increasing the sample size which, in theory, allows for a reduced impact of any outliers and an improve generalizability.

Working with 10+ years of play-by-play data can be problematic in that the model, using just total_yards and total_points, is not aware of changes in the overall style of play NFL. The balance between rushing and passing has shifted, there’s been a philosophical shift in the coaching ranks in “going for it” on 4th down, etc. A simple linear regression cannot account for how these shifts impact the data on a season-by-season basis.

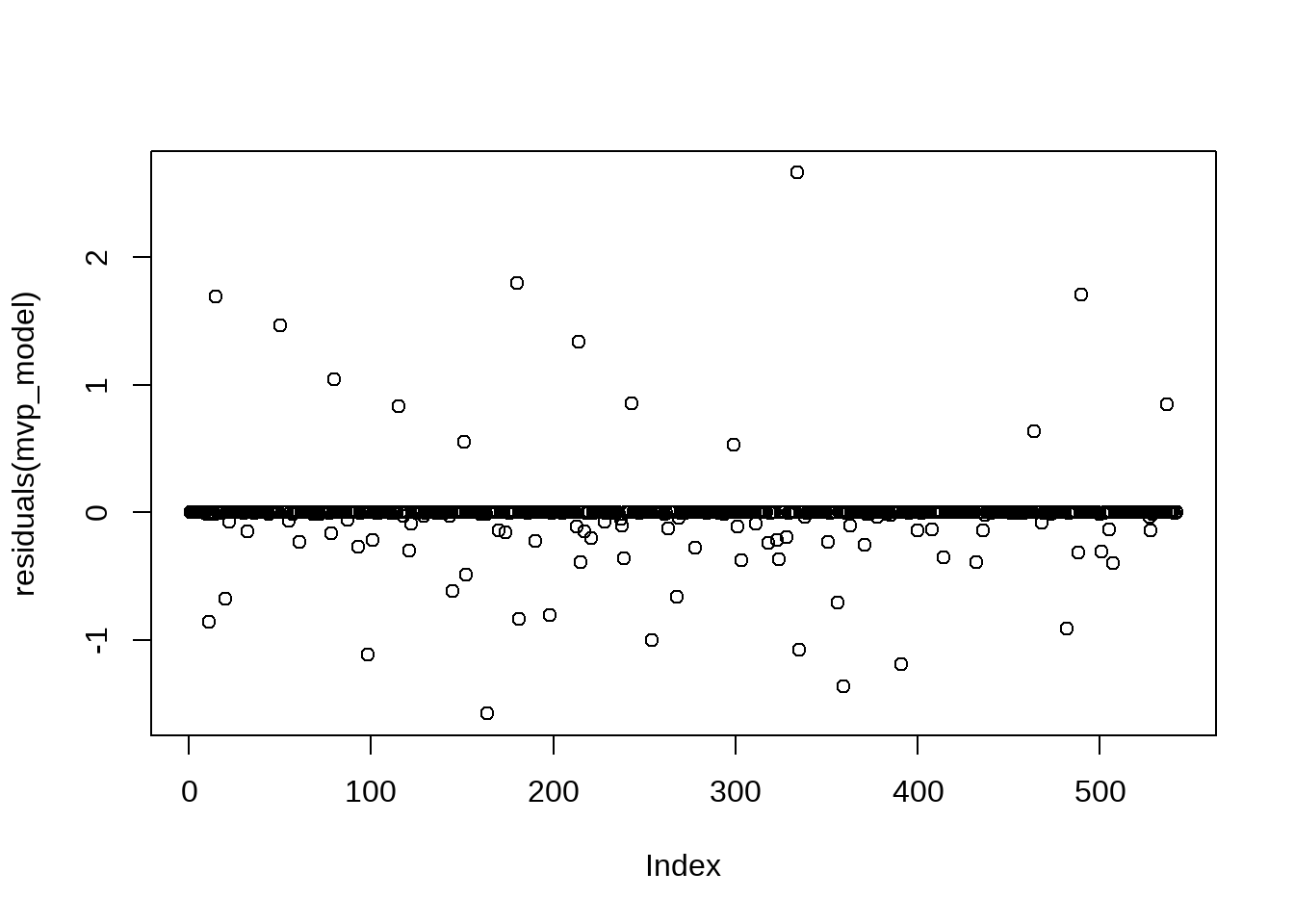

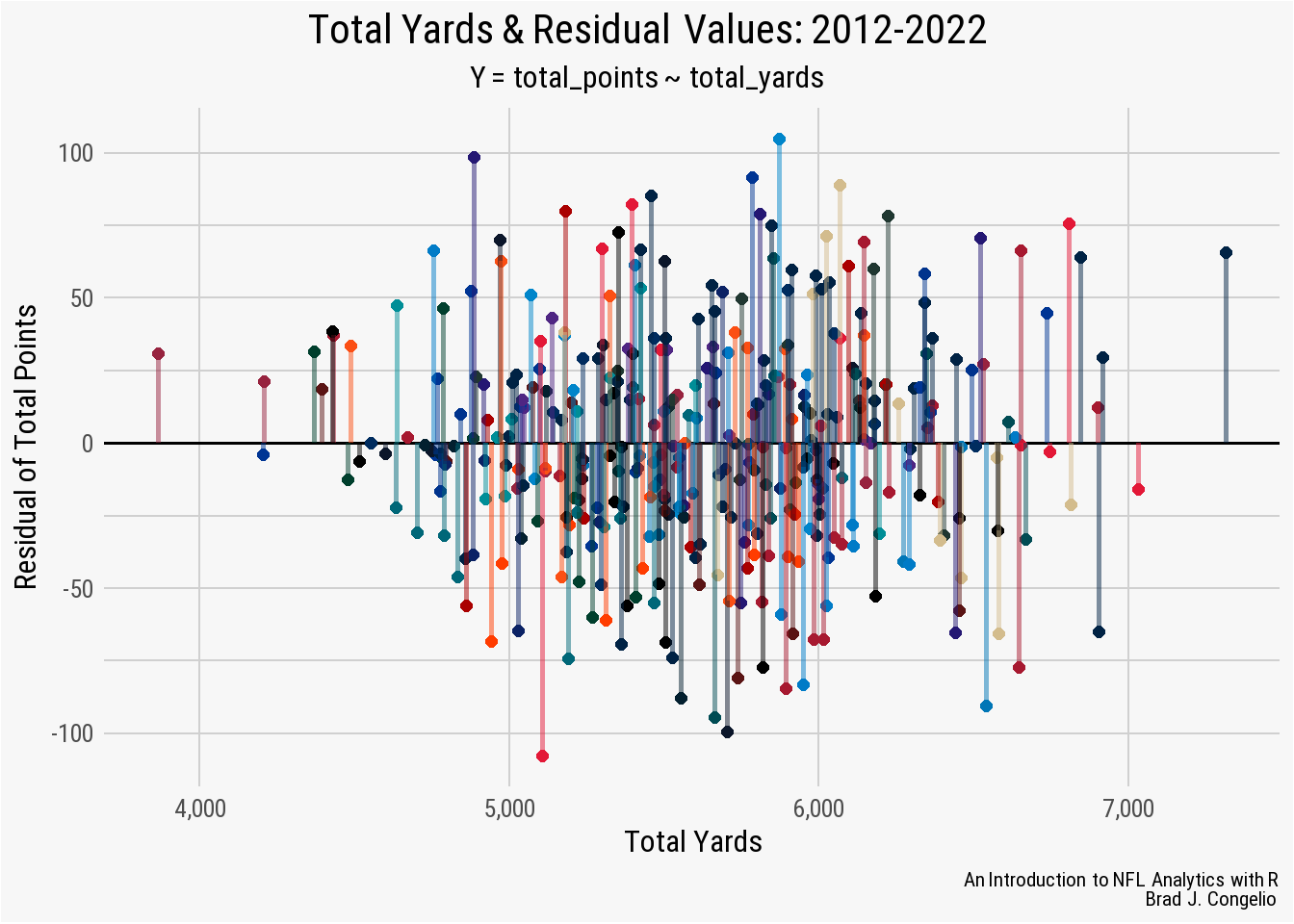

The results from including the total_points and total_yards for each NFL team from 2012-2022 show an improvement of the model, specifically with the residual values.

The residual values after including 20-seasons worth of data are a bit better. The Median is -1.26 which is slightly higher than just one season (M = 1.16). The 1Q and 3Q distributions are both approximately symmetric around the model’s M value compared to just the 2022 season regression that results in a deviation between 1Q and 3Q (-22.33 and 19.15, respectively). The Min and Max values of the new model still indicate longtail cases on both ends of the regression line much like the 2022 model found.

To further examine the residual values, we can use a Shapiro-Wilk Test to test the whether results are normally distributed.

The Shapiro-Wilk Test provides two values with the output: the test statistic (provided as a W score) and the model’s p-value. Scores for W can range between 0 and 1, where results closer to 1 mean the residuals are in a normal distribution. The p-value is used make a decision on the null hypothesis (that there is enough evidence to conclude that there is uneven distribution). In most cases, if the p-value is larger than the regression’s level of significance (typically 0.05), than you may reject the null hypothesis.

We can run the Shapiro-Wilk Test on our 2012-2022 data using the shapiro.test function that is part of the stats package in R.

results_2012_2020 <- residuals(all_season_results)

shapiro_test_result <- shapiro.test(results_2012_2020)

shapiro_test_result

Shapiro-Wilk normality test

data: results_2012_2020

W = 1, p-value = 0.8The W score for the residual is 1, meaning a very strong indication that the data in our model is part of a normal distribution. The p-value is 0.8, which is much larger than the regression’s level of significance (0.05). As a result, we can reject the null hypothesis and again conclude that the data is in a normal distribution.

teams <- nflreadr::load_teams(current = TRUE)

regression_all_seasons <- left_join(regression_all_seasons,

teams, by = c("team" = "team_abbr"))

regression_all_seasons$residuals <- residuals(all_season_results)

ggplot(regression_all_seasons, aes(x = total_yards, y = residuals)) +

geom_hline(yintercept = 0,

color = "black", linewidth = .7) +

stat_fit_residuals(size = 2,

color = regression_all_seasons$team_color) +

stat_fit_deviations(size = 1,

color = regression_all_seasons$team_color, alpha = 0.5) +

scale_x_continuous(breaks = scales::pretty_breaks(),

labels = scales::comma_format()) +

scale_y_continuous(breaks = scales::pretty_breaks(n = 5)) +

labs(title = "**Total Yards & Residual Values: 2012-2022**",

subtitle = "*Y = total_points ~ total_yards*",

caption = "*An Introduction to NFL Analytics with R*<br>

**Brad J. Congelio**") +

xlab("Total Yards") +

ylab("Residual of Total Points") +

nfl_analytics_theme() +

theme(panel.grid.minor.y = element_line(color = "#d0d0d0"))

We can also compare the multiple R2 and adjusted R2 score between the two regression models.

2012 - 2022 Data:

Multiple R-squared: 0.683

Adjusted R-squared: 0.682

2022 Data

Multiple R-squared: 0.736

Adjusted R-squared: 0.727The regression using just the 2022 data results in a slightly better multiple and adjusted R2 score compared to using data from the last twenty seasons of the NFL. While this does indicate that the model based on the single season is better at defining the relationship between a team’s total_yards and total_points it is essential to remember that there is different underlying patterns in the data as a result of the changing culture in the NFL and, ultimately, the epp and flow of team performance as a result of high levels of parity in the league.

In order to account for this “epp and flow” in both team performance and the changing culture/rules of the NFL, we need to turn to a multiple linear regression in order to include these additional factors as it is a model that is capable of better accounting for the nuances of NFL data.

5.2.2 Multiple Linear Regression

A multiple linear regression is extremely similar to a simple linear regression (both in design and implementation in RStudio).The main difference is that a multiple linear regression allows for us to include additional predictor variables by using the + sign in the model’s formula. The inclusion of these additional predictive variables, in theory, allows the model to compute more complex relationships in NFL data and improve on the model’s final performance.

We will again create our first multiple regression linear regression with data from just the 2022 regular season that includes the same predictor (total_yards) and response variable (total_points). For additional predictors, we must consider what circumstances may lead a team to have high total_yards but an amount of total_points that would fall below the model’s predicted value. We will include the following as additional predictors:

- Redzone Efficiency: provided as a percentage, this is a calculation of how many times a team drove into the redzone and scored. A higher percentage is better.

- Redzone Touchdown Efficiency: This is the same as redzone efficiency, but includes only the number of redzone trips divided by the total touchdowns scored from the redzone.

- Redzone Field Goal Efficiency: The same as redzone touchdown efficiency, but with field goals.

- Cumulative Turnovers: The total number of turnovers during the regular season.

- Defensive Touchdowns: The number of touchdowns scored by each team’s defensive unit.

- Special Teams Touchdowns: The number of touchdowns scored by special teams (kick/punt returns).

To begin building the multiple linear regression model for the 2022 season, we can read in the data below using vroom::vroom().

multiple_lm_data <- vroom("http://nfl-book.bradcongelio.com/multiple-lm")

multiple_lm_data <- multiple_lm_data %>%

filter(season == 2022)

teams <- multiple_lm_data$teamThe data for our multiple linear regression has the same four columns as the simple linear regression (season, team, total_points, and total_yards) but also includes the new predictor variables (rz_eff, rz_td_eff, rz_fg_eff, def_td, and spec_tds).

Please note that, of the predictor and response variables, all of the values are in whole number format except for rz_eff, rz_td_eff, and rz_fg_eff. While it is not a problem to include predictors that are on differing scales (in this case, whole numbers and percentages), it may cause difficulty in interpreting the summary statistics. If this is the case, the issue can be resolved by using the scale() function to standardize all the predictors against one another.

The construction of the multiple linear regression model is the same process of the simple regression, with the inclusion of additional predictors to the formula using the + sign. We are also applying a filter() to our multiple_lm_data to retrieve just the 2022 season.

lm_multiple_2022 <- lm(total_points ~ total_yards + rz_eff + rz_td_eff + rz_fg_eff

+ total_to + def_td + spec_tds, data = multiple_lm_data)

summary(lm_multiple_2022)

Call:

lm(formula = total_points ~ total_yards + rz_eff + rz_td_eff +

rz_fg_eff + total_to + def_td + spec_tds, data = multiple_lm_data)

Residuals:

Min 1Q Median 3Q Max

-53.03 -15.58 -0.35 14.60 43.53

Coefficients: (1 not defined because of singularities)

Estimate Std. Error t value Pr(>|t|)

(Intercept) -459.5225 128.5557 -3.57 0.0015 **

total_yards 0.0906 0.0113 8.02 2.2e-08 ***

rz_eff 228.8853 113.5625 2.02 0.0547 .

rz_td_eff 167.2323 82.7097 2.02 0.0540 .

rz_fg_eff NA NA NA NA

total_to 0.4058 1.4934 0.27 0.7881

def_td 4.4560 4.0573 1.10 0.2826

spec_tds 5.4977 7.8674 0.70 0.4911

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 26.9 on 25 degrees of freedom

Multiple R-squared: 0.844, Adjusted R-squared: 0.807

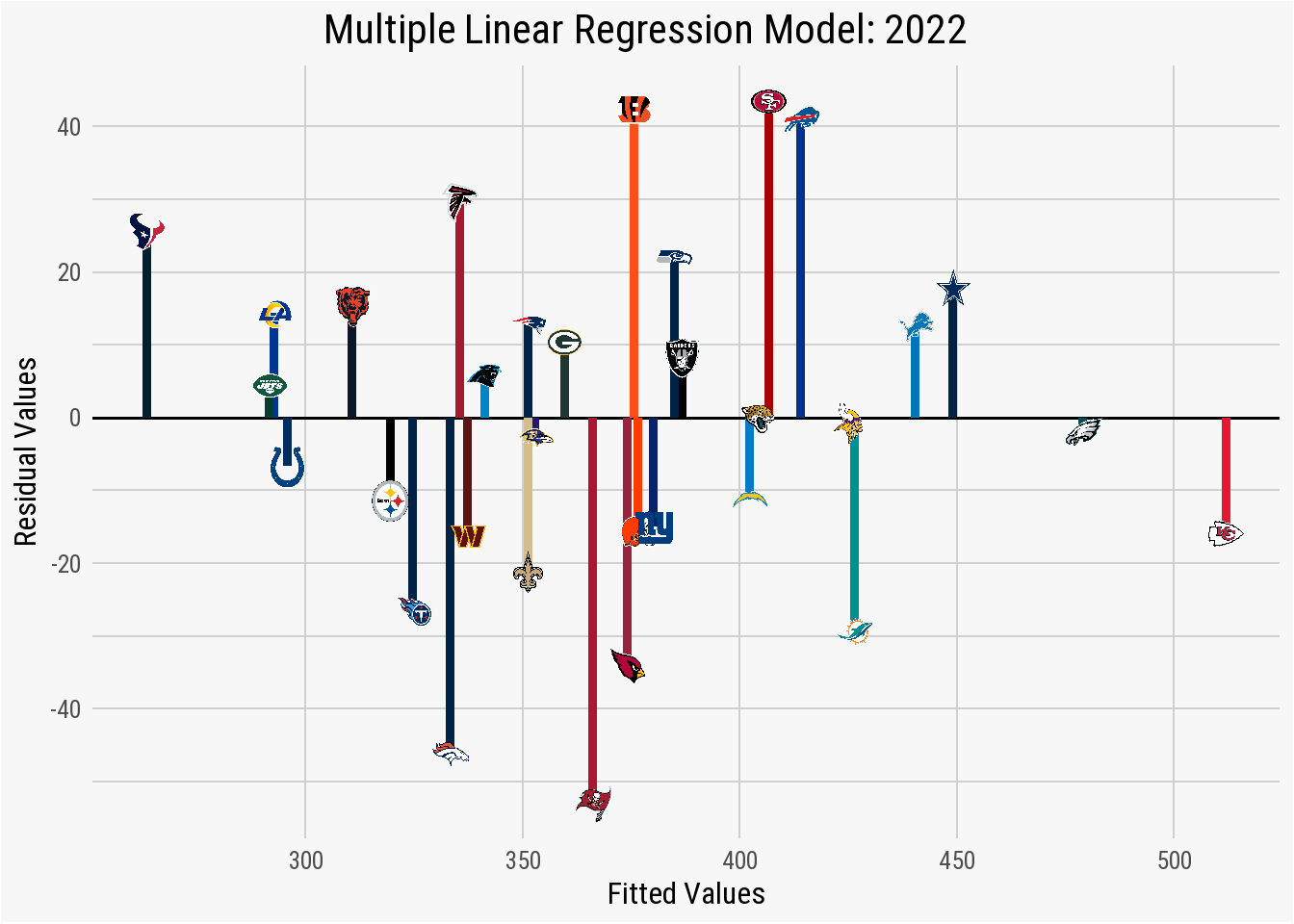

F-statistic: 22.6 on 6 and 25 DF, p-value: 5.81e-09The summary statistic residuals for the multiple linear regression are more evenly distributed towards the mean than our simple linear regression. Based on the residuals, we can conclude that - for 50% of the teams - the model either over or underestimated their total_points by just -0.35 (as listed in the Median residual). The interquartile range (within the 1Q and 3Q quartiles) are both close to the median and the Min and Max residuals both decreased significantly from our simple linear model, indicating a overall better line of fit.

We can confirm that the multiple linear regression resulted in an even distribution of the residuals by again using a Shapiro-Wilk Test.

Shapiro-Wilk normality test

data: results_lm_2022

W = 1, p-value = 0.9The results of the Shapiro-Wilk test (W = 1 and p-value = 0.9) confirm that residuals are indeed evenly distributed. A visualization showcases the model’s even distribution of the residuals.

mlm_2022_fitted <- predict(lm_multiple_2022)

mlm_2022_residuals <- residuals(lm_multiple_2022)

plot_data_2022 <- data.frame(Fitted = mlm_2022_fitted,

Residuals = mlm_2022_residuals)

plot_data_2022 <- plot_data_2022 %>%

cbind(teams)

nfl_teams <- nflreadr::load_teams(current = TRUE)

plot_data_2022 <- plot_data_2022 %>%

left_join(nfl_teams, by = c("teams" = "team_abbr"))

ggplot(plot_data_2022, aes(x = Fitted, y = Residuals)) +

geom_hline(yintercept = 0,

color = "black", linewidth = .7) +

stat_fit_deviations(size = 1.75,

color = plot_data_2022$team_color) +

geom_image(aes(image = team_logo_espn),

asp = 16/9, size = .0325) +

scale_x_continuous(breaks = scales::pretty_breaks(),

labels = scales::comma_format()) +

scale_y_continuous(breaks = scales::pretty_breaks()) +

labs(title = "**Multiple Linear Regression Model: 2022**") +

xlab("Fitted Values") +

ylab("Residual Values") +

nfl_analytics_theme() +

theme(panel.grid.minor.y = element_line(color = "#d0d0d0"))

Just as the residual values in the summary statistics indicated, plotting the fitted_values against the residual_values shows an acceptable spread in the distribution, especially given the nature of NFL data. Despite positive results in the residual values, the summary statistics of the multiple linear regression indicates a significant issue with the data. Within the Coefficients, it is explained that one of the items is “not defined because of singularities.”

“Singularities” occur in the data as a result of the dreaded multicollinearity between two or more predictors. The involved predictors were found to have a high amount of correlation between one another, meaning that one of the variables can be predicted in a near linear fashion with one or more of the other predictive variables. As a result, it is difficult for the regression model to correctly estimate the contribution of these dependent variables to the response variable.

The model’s Coefficients of our multiple linear regression shows NA values for the rz_fg_eff predictor (the percentage of times a team made a field goal in the red zone rather than a touchdown). This is because rz_fg_eff was one of the predictive variables strongly correlated and was the one dropped by the regression model to avoid producing flawed statistics as a result of the multicollinearity.

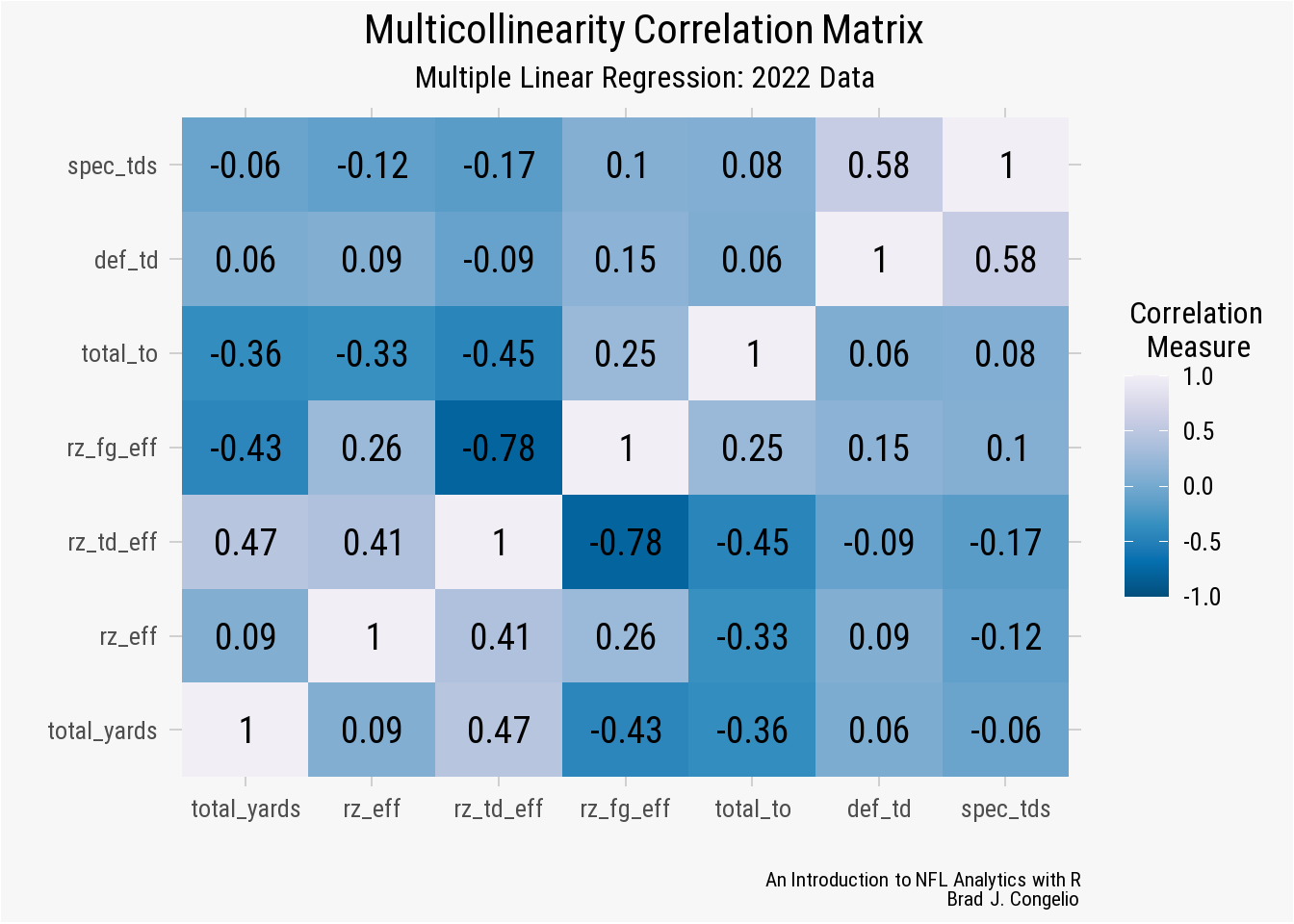

If you are comfortable producing the linear regression with rz_fg_eff being a dropped predictor, there are no issues with that. However, we can create a correlation plot that allows is to determine which predictors have high correlation values with others. Examining the issue allows us to determine if rz_fg_eff is, indeed, the predictive variable we want the regression to drop or if we’d rather, for example, drop rz_eff and keep just the split between touchdowns and field goals.

regression_corr <-

cor(multiple_lm_data[, c("total_yards",

"rz_eff", "rz_td_eff",

"rz_fg_eff", "total_to",

"def_td", "spec_tds")])

melted_regression_corr <- melt(regression_corr)

ggplot(data = melted_regression_corr, aes(x = Var1,

y = Var2,

fill = value)) +

geom_tile() +

scale_fill_distiller(palette = "PuBu",

direction = -1,

limits = c(-1, +1)) +

geom_text(aes(x = Var1, y = Var2, label = round(value, 2)),

color = "black",

fontface = "bold",

family = "Roboto", size = 5) +

labs(title = "Multicollinearity Correlation Matrix",

subtitle = "Multiple Linear Regression: 2022 Data",

caption = "*An Introduction to NFL Analytics with R*<br>

**Brad J. Congelio**") +

nfl_analytics_theme() +

labs(fill = "Correlation \n Measure", x = "", y = "") +

theme(legend.background = element_rect(fill = "#F7F7F7"),

legend.key = element_rect(fill = "#F7F7F7"))

Using a correlation plot allows for easy identification of those predictive variables that have high correlation with one another. The general rule is that two predictors become problematic in the regression model if the coefficient between the two is above 0.7 (or 0.8, given domain knowledge about the context of the data).

In our correlation plot, there are two squares (indicated by the darkest blue color) that have a value greater than 0.7 (or -0.7 in this case, as both strong and negative correlations are capable of producing multicollinearity. The two squares happen to relate to the same relationship between the rz_fg_eff and rz_td_eff predictors.

Recall that the regression model automatically removed the rz_fg_eff from the measured Coefficients. Given the context of the data, I am not sure that is the best decision. Because we are examining the relationship between the predictive variables and total_points, removing the rz_fg_eff variable inherently erases a core source of points in a game of football.

Because of this - and since our rz_eff predictor accounts for both touchdowns and field goals - I believe we can move forward on rerunning the regression without bothrz_fg_eff and rz_td_eff.

To run the multiple linear regression again, without the predictors relating to red zone touchdown and field efficiency, we will drop both from our multiple_lm_2022 data frame, rerun the regression model, and then examine the ensuing summary statistics.

lm_multiple_2022_edit <- multiple_lm_data %>%

select(-rz_td_eff, -rz_fg_eff)

lm_multiple_2022_edit <- lm(total_points ~ total_yards + rz_eff +

total_to + def_td + spec_tds,

data = lm_multiple_2022_edit)

summary(lm_multiple_2022_edit)

Call:

lm(formula = total_points ~ total_yards + rz_eff + total_to +

def_td + spec_tds, data = lm_multiple_2022_edit)

Residuals:

Min 1Q Median 3Q Max

-60.18 -14.83 -3.79 18.99 55.92

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -472.5877 135.8042 -3.48 0.0018 **

total_yards 0.0998 0.0109 9.13 1.4e-09 ***

rz_eff 309.1167 112.5462 2.75 0.0108 *

total_to -0.2760 1.5388 -0.18 0.8591

def_td 3.5808 4.2670 0.84 0.4090

spec_tds 4.9584 8.3167 0.60 0.5562

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 28.5 on 26 degrees of freedom

Multiple R-squared: 0.819, Adjusted R-squared: 0.784

F-statistic: 23.5 on 5 and 26 DF, p-value: 7.03e-09We have certainly simplified the model by removing both rz_td_eff and rz_fg_eff but the impact of this change is a fair trade off to avoid further issues with multicollinearity. Our new adjusted R is still high (0.784), only dropping a bit from the original model that included both predictors (0.807). Both models did well at explaining the amount of variance between the predictors and the response variable. While the F-statistic and the p-value are strong in both models, it is important to note that the Residual standard error dropped from 27 in the original model to 28 in the more simplified version. Given that this value is the average difference between the actual values and the predicted equivalents in the regression, both would ideally be smaller.

With multiple linear regression model producing acceptable results over the course of the 2022 season, we can now see if the results remain stable when produced over the course of the 2012-2022 seasons.

multiple_lm_data_all <- multiple_lm_data %>%

select(-rz_td_eff, -rz_fg_eff, -season)

lm_multiple_all <- lm(total_points ~ total_yards + rz_eff +

total_to + def_td + spec_tds,

data = multiple_lm_data_all)

summary(lm_multiple_all)

Call:

lm(formula = total_points ~ total_yards + rz_eff + total_to +

def_td + spec_tds, data = multiple_lm_data_all)

Residuals:

Min 1Q Median 3Q Max

-60.18 -14.83 -3.79 18.99 55.92

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -472.5877 135.8042 -3.48 0.0018 **

total_yards 0.0998 0.0109 9.13 1.4e-09 ***

rz_eff 309.1167 112.5462 2.75 0.0108 *

total_to -0.2760 1.5388 -0.18 0.8591

def_td 3.5808 4.2670 0.84 0.4090

spec_tds 4.9584 8.3167 0.60 0.5562

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 28.5 on 26 degrees of freedom

Multiple R-squared: 0.819, Adjusted R-squared: 0.784

F-statistic: 23.5 on 5 and 26 DF, p-value: 7.03e-09The results of the multiple linear regression over data from the 2012-2022 indicates a statistically significant relationship between our predictor variables and a team’s total yards. That said, two items are worth further exploration.

- The model’s Residual standard error increased closer to 30, as opposed to the values of 27 and 28 from the models built on a single season of data. This means that the model, on average, is over or underpredicting the actual values by approximately thirty points. To verify that a residual standard error of 30 is not too high, we can evaluate the value against the scale of our data based on the mean and/or median averages of the

total_pointsvariable. As seen below, the model’s RSE as a percentage of the mean is8.1%and its percentage of the median is8.2%. Given that both values are below 10%, it is reasonable to conclude that the value of the model’s residual standard error is statistically small compared to the scale of thetotal_pointsdependent variable.

- The

spec_tdspredictor, which is the total number of special teams touchdowns scored by a team, has ap-valueof 0.61. This high of ap-valueindicates that the amount of special teams touchdowns earned by a team is not a dependable predictor of the team’s total points. Given the rarity of kickoff and punt returns, it is not surprising that the predictor returned a highp-value. If we run the regression again, without thespec_tdspredictive variable, we get results that are nearly identical to the regression model that includes it as a predictor. The only significant difference is a decrease in theF-statisticfrom 398 to 317. Given the small decrease, we will keepspec_tdsin the model.

The final step of our multiple linear regression model is feeding it new data to make predictions on.

To begin, we need to create a new data frame that holds the new predictor variables. For nothing more than fun, let’s grab the highest value from each predictive variable between the 2012-2022 seasons.

This hypothetical team gained a total of 7,317 yards in one season and was incredibly efficient in the red zone, scoring 96% of the time. It also scored nine defensive touchdowns and returned a punt or a kickoff to the house four times. Unfortunately, the offense also turned the ball over a whopping total of 41 times.

We can now pass this information into our existing model using the predict function and it will output the predicted total_points earned by this hypothetical team based on the multiple linear regression model we built with 20 years of NFL data.

new_predictions <- predict(lm_multiple_all, newdata = new_observations)

new_predictionsThe model determined, based on the new predictor variables provided, that this hypothetical team will score a total of 563 points, which is the second-highest cumulative amount scored by a team dating back to the 2012 season (the 2013 Denver Broncos scored 606 total points). In this situation, the hypothetical team has nearly double the turnovers as the 2013 Bronco (41 turnovers to 21). It is reasonable that providing this hypothetical team a lower number of turnovers would result in it becoming the highest scoring team since 2012.

5.2.3 Logistic Regression

Logistic regressions are particularity useful when modeling the relationship between a categorical dependent variable and a given set of predictor variables. While linear models, as covered in the last section, handles data with a continuous dependent variable, logistic regressions are used when the response variable is categorical (whether a team successfully converted a third down, whether a pass was completed, whether a running back fumbled on the play, etc.). In each example, there are only two possible outcomes: “yes” or “no”.

Moreover, a logistic regression model does not model the relationship between the predictors and the response variables in a linear fashion. Instead, logistic regressions use seek to mutate the predictors into a values between 0 and 1. Specifically, the formula for a logistic regression is as follows:

\[ P(Y=1|X) = \frac{1}{1 + e^{-(\beta_0 + \beta_1X_1 + \beta_2X_2 + ... + \beta_nX_n)}} \]

- \(P(Y=1|X)\) in the equation is the likelihood of the event \(Y=1\) taking place given the provided predictor variables (\(X\)).

- The model’s coefficients are represented by \(\beta_0 + \beta_1X_1 + \beta_2\).

- \(X1, X2, ..., Xn\) represent the model’s predictor variables.

- The \(1\) in the formula’s numerator results in the model’s probability values always being between 0 and 1.

- The \(1\) in the denominator is a part of the underlying logistic function and ensures that the value is always greater than or equal to 1.

- Finally, the \(e\) is part of the model’s logarithm (or the constant of 2.71828). In short, this function allows the linear combination to be a positive value.

To better explain the formula, let’s assume we want to predict the probability of any one NFL team winning a game (again, in a binary 1 for winning, or 0 for losing). These binary outcome is represented by the \(Y\). The predictor variables, such as the team’s average points scored, the average points allowed by the opposing defense, home-field advantage, and any other statistics likely to be important in making the prediction are represented by \(X1, X2, ..., Xn\). The model’s coefficients, or the relationship between the predictors and a team’s chance of winning, are represented by \(\beta_0 + \beta_1X_1 + \beta_2\). In the event that a team’s average passing yards is represented by the \(B1\) coefficient, a positive number suggests that higher average passing yards results in an increase in the probability of winning. Conversely, a negative coefficient number indicates the statistic has a negative impact on winning probability.

To that end, there are three distinct types of logistic regressions: binary, multinomial, and ordinal (we will only be covering binary and multinomial in this chapter, however). All three allow for working with various types of dependent variables.

Binary regression models are used when the dependent variable (the outcome) has just two outcomes (typically presented in binary format - 1 or 0). Using the logistic function, a binary regression model determines the probability of the dependent event occurring given the predictor variables. As highlighted below, a binary regression model can be used to predict the probability that a QB is named MVP at the conclusion of the season. The response variable is a binary (1 indicating that the player was named MVP and 0 indicating that the player did not win MVP). Various passing statistics can serve as the predictor variables.

A multinomial regression model is an extension of the binary model in that it allows for the dependent variable to have more than two unordered categories. For example, a multinomial regression model can be used to predict the type of play a team is going to run (run or pass) based on the predictor variables (down and distance, time remaining, score differential, etc.).

An ordinal regression model is used when the dependent variables not only have ordered categories, but the structure and/or order of these categories contains meaningful information. Ordinal regression can be used, for example, to attempt to predict the severity of a player’s injury. The ordered and meaningful categories of the dependent variable can coincide with the severity of the injury itself (mild, moderate, severe, season-ending) while the predictor variables include several other types of categories (the type of contact, the playing surface, what position the player played, how late into the season it occurred, whether the team is coming off a bye week, etc.).

5.2.3.1 Logistic Regression 1: Binary Classification

A binary regression model is used when the dependent variable is in binary format (that is, 1 for “yes” or 0 for “no). This binary represents two - and only two - possible outcomes such as a a team converting on third down or not or if a team won or lost the game. Constructing binary regression models allows use to predict the likelihood of the dependent event occurring based on the provided set of predictor variables.

We will build a binary regression model to predict the probability that an NFL QB will win the MVP at the conclusion of the season.

Let’s first create a data frame, called qb_mvp, that contains the statistics for quarterbacks that we intuitively believe impact the likelihood of a QB being named MVP.

player_stats <- nflreadr::load_player_stats(2000:2022) %>%

filter(season_type == "REG" & position == "QB") %>%

filter(season != 2000 & season != 2005 & season != 2006 & season != 2012) %>%

group_by(season, player_display_name, player_id) %>%

summarize(

total_cmp = sum(completions, na.rm = TRUE),

total_attempts = sum(attempts, na.rm = TRUE),

total_yards = sum(passing_yards + rushing_yards, na.rm = TRUE),

total_tds = sum(passing_tds + rushing_tds, na.rm = TRUE),

total_interceptions = sum(interceptions, na.rm = TRUE),

mean_epa = mean(passing_epa, na.rm = TRUE)) %>%

filter(total_attempts >= 150) %>%

ungroup()We are using data from over the course of 22 NFL seasons (2000 to 2022), but then removing the 2000, 2005, 2006, and 2012 seasons as the MVP for each was not a quarterback (Marshall Faulk, Shaun Alexander, LaDainian Tomlison, and Adrian Peterson, respectively). To begin building the model, we collect QB-specific metric from the load_player_stats() function from nflreadr, including: total completions, total attempts, total yards (passing + rushing), total touchdowns (passing + rushing), and the QB’s average EPA (only for pass attempts).

Because the style of play in the NFL has changed between the earliest seasons in the data frame and the 2022 season, it may not be fair to compare the specific statistics to each other. Rather, we can rank the quarterbacks for each season, per statistic, in a decreasing fashion as the statistical numbers increase. For example, Patrick Mahomes led the league in passing yards in the 2022 season. As a result, he is ranked as 1 in the forthcoming yds_rank column while the QB with the second-most yards (Justin Herbert, with 4,739) will be ranked as 2 in the yds_rank column. This process allows us to normalize the data and takes into the account the change in play style in the NFL over the time span of the data frame. To add the rankings to our data, we will create a new data frame titled qb_mvp_stats from the existing player_stats and then use the order function from base R to provide the rankings in descending order. After, we use the select() function to gather the season, player_display_name, and player_id as well as the rankings that we created using order().

qb_mvp_stats <- player_stats %>%

dplyr::group_by(season) %>%

mutate(cmp_rank = order(order(total_cmp, decreasing = TRUE)),

att_rank = order(order(total_attempts, decreasing = TRUE)),

yds_rank = order(order(total_yards, decreasing = TRUE)),

tds_rank = order(order(total_tds, decreasing = TRUE)),

int_rank = order(order(total_interceptions, decreasing = FALSE)),

epa_rank = order(order(mean_epa, decreasing = TRUE))) %>%

select(season, player_display_name, player_id, cmp_rank, att_rank, yds_rank, tds_rank,

int_rank, epa_rank)The data, as collected from load_player_stats(), does not contain information pertaining to MVP winners. To include this, we can load a prepared file using data from Pro Football Reference. The data contains two variables: season and player_name wherein the name represents the player that won that season’s MVP. After reading the data in, we can use the mutate function to create a new variable called mvp that is in binary (1 representing that the player was the MVP). After, we can merge this data into our qb_mvp_stats data frame. After merging, you will notice that the mvp column has mainly NA values. We must set all the NA values to 0 to indicate that those players did not win the MVP that season.

The above results in a data frame with 723 observations with 6 predictive variables used to determine the probability of the QB being named MVP. We can now turn to the construction of the regression model.

If you have not already done so, please install tidymodels and load it.

install.packages("tidymodels")

library(tidymodels)While we will not be building the binary model with the tidymodels package, we will be utilizing the associated rsample package - which is used to created various types of resamples and classes for analysis - to split our qb_mvp_stats data into both a training and testing set.

Like many of the models to follow in this chapter, it is important to have both a training and testing set of data when performing a binary regression study for three reasons:

- Assess model performance. Having a trained set of data allows us to evaluate the model’s performance on the testing set, allowing us to gather information regarding how we can expect the model to handle new data.

- It allowd us to avoid overfitting. Overfitting is a process that occurs when the regression model recognizes the “statistical noise” in the training data but not necessarily the underlying patterns. When this happens, the model will perform quite well on the training data but will then fail when fed new, unseen data. By splitting the data, we can use the withheld testing data to make sure the model is not “memorizing” the information in the training set.

- Model selection. In the case that you are evaluating several different model types to identify the best performing one, having a testing set allows you to determine which model type is likely to perform best when provided unseen data.

The process of splitting the data into a training and testing set involves three lines of code with the rsample package. Prior to splitting the data, we will create a new data frame titled mvp_model_data that is our existing qb_mvp_stats information after using the ungroup() function to overwrite any prior group_by() that was applied to the data frame. We first use the initial_split function to create a split of the data into a training and testing set. Moreover, we use the strata argument to conduct what is called “stratified sampling.” Because there are very few MVPs in our data compared to those non-MVP players, using the strata argument allows us to create a training and test set with a similar amount of MVPs in each. We then use the training and testing argument to create the data for each from the initial split.

mvp_model_data <- qb_mvp_stats %>%

ungroup()

mvp_model_split <- rsample::initial_split(mvp_model_data, strata = mvp)

mvp_model_train <- rsample::training(mvp_model_split)

mvp_model_test <- rsample::testing(mvp_model_split)With the MVP data split into both a training and testing set, we use the glm package to conduct the regression analysis on the mvp_model_train data frame.

mvp_model <- glm(formula = mvp ~ cmp_rank + att_rank + yds_rank +

tds_rank + int_rank + epa_rank,

data = mvp_model_train, family = binomial)While the model does run, and placing the mvp_model results in the RStudio environment, we receive the following warning message in the Console:

Warning message: glm.fit: fitted probabilities numerically 0 or 1 occurred

As the message indicates, this is not necessarily an error in the modeling process, but an indication that some of the fitted probabilities in the model are fitted numerically to 0 or 1. This most often occurs in binomial regression models when there is nearly perfect separation within the data underpinning the model, meaning there is one or more predictor variables (passing yards, passing touchdowns, etc.) that are able to perfectly classify the outcome (MVP) of the model. This type of “perfect separation” in modeling is usual when working with data that is both limited and “rare” - only one QB per season in our data is able to win the MVP award. We can create a quick histogram of the fitted probabilities wherein multitudes of probabilities close to either 0 or 1 is a good explanation for the warning message.

The histogram clearly shows the separation issue in the model’s data. The majority of the QBs in the data have a 0% to an incredibly slim chance to win the MVP, while the bar for those with a fitted probability from 0.2 to 1.0 are barely visible in the plot. While a warning that “fitted probabilities numerically 0 or 1 occurred” is cause for further examination, we are able to diagnose the issue by using our “domain knowledge” of the data - that is, of course separation is going to occur since only one QB can be MVP in any season.

To verify that the model is predicting reasonable probabilities, we can calculate the predicted values and probabilities on the existing training data.

training_probs <- mvp_model_train %>%

mutate(pred = predict(mvp_model, mvp_model_train,

type = "response")) %>%

group_by(season) %>%

mutate(pred_mvp = as.numeric(pred == max(pred, na.rm = TRUE)),

mvp_prob = pred / sum(pred))With the model now trained, we can take the results of the model and apply it to our withheld mvp_model_test data frame. Using the predict function, we instruct to take the modeled predictions from the trained mvp_model and generate further predictions on the mvp_model_test data frame. Because the type = "response" argument results in a probability between 0 and 1, we use ifelse to create a “cutoff” for the probabilities, where any number above 0.5 will be 1 while any number below will be 0.

With the model now fitted to previously unseen data (the withheld mvp_model_test data frame), we can calculate its accuracy.

accuracy <- sum(test_class_predictions == mvp_model_test$mvp) / nrow(mvp_model_test)

accuracy <- round(accuracy, 2)

print(paste("Accuracy:", accuracy))[1] "Accuracy: 0.98"A model that predicts with 97% accuracy seems great, right?

However, we must keep in mind the significant class imbalance in our data as visualized on the earlier histogram. Roughly 3% of the quarterbacks in the qb_mvp_stats data frame won the MVP award, meaning the remaining 97% of the quarterbacks did not.

Because of this, a model could always predict “not MVP” and, as a result, also have an accuracy of 97%.

While a high accuracy score like the model produced is a promising first sign, it is important to remember that accuracy - as a metric - only gives the broad picture. It does not depict how the model is performing on each individual class (that is, 0 and 1). To get a more granular understanding of the model’s performance, we can turn to metrics such as precision , recall , and the F1 score.

To begin determining the scores for each, we must conduct a bit of data preparation by ensuring that both test_class_predictions and mvp_model_test$mvp are both as.factor(). After, we use the confusionMatrix function from the caret package to build a matrix between the binary class predictions (from test_class_predictions) and the true labels (from mvp_model_test$mvp).

library(caret)

test_class_predictions <- as.factor(test_class_predictions)

mvp_model_test$mvp <- as.factor(mvp_model_test$mvp)

mvp_cm <- confusionMatrix(test_class_predictions, mvp_model_test$mvp)After constructing the confusion matrix, we can calculate the results for precision, recall, and F1.

precision <- mvp_cm$byClass['Pos Pred Value']

recall <- mvp_cm$byClass['Sensitivity']

f1 <- 2 * (precision * recall) / (precision + recall)

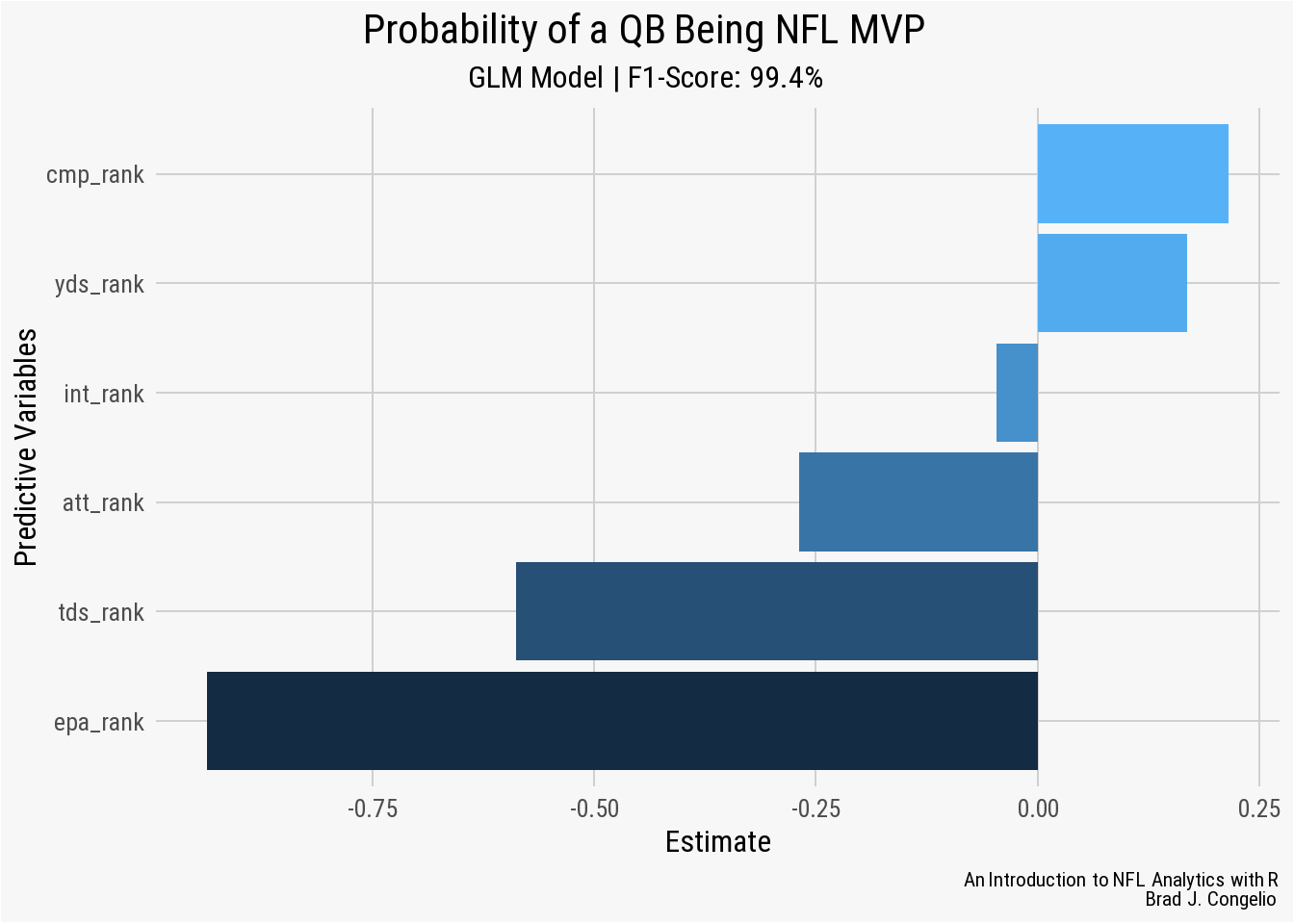

print(paste("Precision:", precision))[1] "Precision: 0.988636363636364"[1] "Recall: 0.994285714285714"[1] "F1 Score: 0.991452991452991"The resulting scores further indicate a well-trained and performing model.

- Precision - the Positive Predictive Value is the fraction of true positives among all positive predictions (including false positives). The resulting score of 0.96 means that the model correctly predicted the MVP 96% of the time.

- Recall - the Sensitivity or True Positive Rate is similar to precision in that it is also the fraction of true positive predictions, but only those among all true positives. Our recall scores of 0.994 means that the model correctly predicted the MVP 99.4% of the time.

- F1 Score - the F1 score is the “harmonic mean” between precision and recall, providing a balanced combination of both. With another score of 0.98, the model’s F1 Score indicates a healthy balance between both precision and recall in its predictions.

The model producing the same score for each metric is indicative that it contains an equal number of both false positives and false negatives, which is ultimately a sign of balance in the prediction making process.

Based on the results of accuracy, precision, recall, and the F1 score, our model is quite accurate. We can now create our own “fictional” data frame with corresponding predictor variables to determine the probabilities for each of the quarterbacks to be named MVP.

new_mvp_data <- data.frame(

cmp_rank = c(1, 4, 2),

att_rank = c(2, 1, 5),

yds_rank = c(5, 3, 7),

tds_rank = c(3, 1, 2),

int_rank = c(21, 17, 14),

epa_rank = c(3, 1, 5))

new_mvp_predictions <- predict(mvp_model,

newdata = new_mvp_data, type = "response")

new_mvp_predictions 1 2 3

0.2413 0.9350 0.0861 According to our fully trained and tested model, fictional quarterback 2 has just over a 90% probability of winning the MVP when compared to the two other opposing quarterbacks.

Finally, we can use the broom package to transform the model’s information into a tidyverse-friendly data frame allowing us to visualize the information using ggplot.

tidy_mvp_model <- broom::tidy(mvp_model) %>%

filter(term != "(Intercept)")

ggplot(tidy_mvp_model, aes(x = reorder(term, estimate),

y = estimate, fill = estimate)) +

geom_col() +

coord_flip() +

labs(x = "Predictive Variables",

y = "Estimate",

title = "**Probability of a QB Being NFL MVP**",

subtitle = "*GLM Model | F1-Score: 99.4%*",

caption = "*An Introduction to NFL Analytics with R*<br>

**Brad J. Congelio**") +

theme(legend.position = "none") +

nfl_analytics_theme()

A negative Estimate for a Predictive Variable means that as that statistic increases (a player has a worse rank among his peers), the probable odds of that quarterback being awarded the Most Value Players decreases. For example, in our model, the epa_rank predictor has an Estimate of approximately -0.8 which means for each drop in rank, a quarterback’s chance of being named MVP decreases by -0.8. Within the model, int_rank, att_rank, yds_rank, tds_ranks, and epa_rank align with our domain knowledge intuition: as quarterbacks perform worse in these areas, their chance of being MVP decreases.

Why, then, does a worse performance in cmp_rank provide a quarterback an increased chance in becoming MVP? Several issues could be causing this, including statistical noise, a variable that is highly correlated with cmp_rank, or a general non-linear relationship between cmp_rank and the response variable. If we were to continue working on this model, a good first step would using the filter() function to remove those quarterbacks with a limited number of attempts during the season.

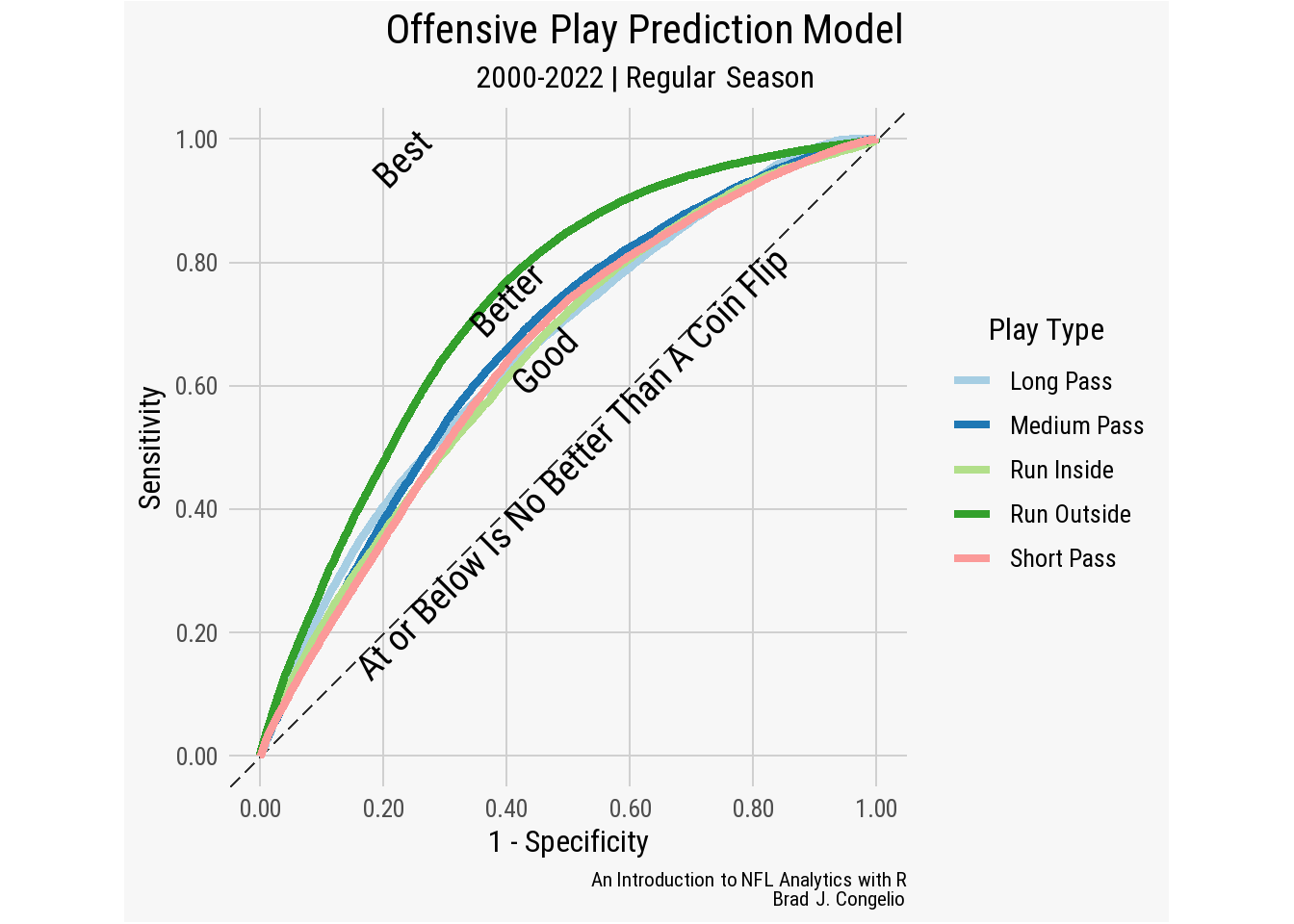

5.2.3.2 Logistic Regression 2: Multinomial Regression with tidymodels

In our previous example building a binomial regression model, there were only two outcomes: 0 and 1 for whether the specific quarterback was named that season’s MVP. A multinomial regression is an extension of the binomial model, but is used when there are multiple categories of outcomes rather than just two. The model itself ultimately determines the probability of each dependent variable based on the given set of predictive values. Using the softmax function to ensure that the probabilities for each dependent variable total 1, the main assumptions of the model are:

- the dependent variable must be categorical with multiple unordered categories

- the predictor variables can be a combination of continuous values or categorical

- much like all the other models we’ve worked on, there must not be multicollinearity among the predictor values

- the observations must be independent of each other

The specific formula for a multinomial regression is as follows:

\[ \log \left( \frac{P(Y = k)}{P(Y = K)} \right) = \beta_{k0} + \beta_{k1} X_1 + \beta_{k2} X_2 + \ldots + \beta_{kp} X_p \]

- \(Y\) is the categorical dependent variable

- In the formula, \(X_{i1}, X_{i2}, ..., X_{ip}\) are the predictive variables

- The coefficients are represented by \(\beta_{k0}, \beta_{k1}, ...,\beta_{kp}\)

- Finally, \(K\) represents the number of categories the dependent variable has

As an example, let’s build a multinomial regression model that predicts the type of offensive play call based on contextual factors of the game. We will build the model around five dependent categories:

- run inside (noted as

middlein the play-by-play data) - run outside (noted as

leftorrightin the play-by-play data) - short pass (passes with air yards under 5 yards)

- medium pass (passes with air yards between 6-10 yards)

- long passes (passes with air yards of 11+ yards)

The construction of a multinomial regression will allow us to find the probability of each play type based on factors in the game. Such a model speaks directly to coach play-calling tendencies given the situation. To begin, we will gather data from the 2010-2022 NFL regular season. After loading the data, we will select the predictor variables and then conduct some featured engineering (which is, in this case, inspired by a presentation given by Thomas Mock on how to use tidymodels to predict a run or pass using binary regression)(Mock, 2020).

An Introduction to the tidymodels package.

As just mentioned, tidymodels is a collection of various packages for statistical modeling and machine learning. Importantly, the tidymodels package is structured much in the same way that tidyverse is - meaning that the packages work together to provide a unified and efficient workflow for constructing, testing, and evaluating models. A total of 43 different model types come in the base installation of tidymodels, ranging from simple linear regressions to more complex approaches such as neural networks and Bayesian analysis. You can view the different model types, housed within the parsnip package, at the tidymodels website: Type of models included in tidymodels.

Because tidymodels follows the tidyverse philosophy of “providing a uniform interface” so that “packages work together naturally,” there is a general flow in which users construct a model.

- First, data preprocessing and feature engineering is done with the

recipespackage. - Next, the

parsnippackage is used to create a model specification. - If any resampling or tuning is being conducted during the model, the

rsamplepackage is used to organize the necessary type of hyperparameter tuning (grid search, regular search, etc.). - The

workflowspackage is then used to combine all of the prior steps into a singular object in your RStudio environment. - After training the model on the initial split of data, the

yardstickpackages allows for various forms of evaluation, including accuracy, precision, recall, F1 score, RMSE, and MAE. - Once you are happy with the training evaluation of the model, the

last_fit()function conducts the process on the entire dataset to generate predictions.

Below, we will take our newly created model_data_clean data frame and conduct a multinomial logistic regression using the tidymodels package.

pbp <- nflreadr::load_pbp(2006:2022) %>%

filter(season_type == "REG")

pbp_prep <- pbp %>%

select(

game_id, game_date, game_seconds_remaining,

week, season, play_type, yards_gained,

ydstogo, down, yardline_100, qtr, posteam,

posteam_score, defteam, defteam_score,

score_differential, shotgun, no_huddle,

posteam_timeouts_remaining, defteam_timeouts_remaining,

wp, penalty, half_seconds_remaining, goal_to_go,

td_prob, fixed_drive, run_location, air_yards) %>%

filter(play_type %in% c("run", "pass"),

penalty == 0, !is.na(down), !is.na(yardline_100)) %>%

mutate(in_red_zone = if_else(yardline_100 <= 20, 1, 0),

in_fg_range = if_else(yardline_100 <= 35, 1, 0),

two_min_drill = if_else(half_seconds_remaining <= 120, 1, 0)) %>%

select(-penalty, -half_seconds_remaining)There is a lot going in on the first bit of code to prepare our multinomial regression. We first collect regular season play-by-play data from 2006 to 2022. It is important to note that the 2006 season was not arbitrarily chosen. Rather, it is the first season in which the air_yards variable is included in the data. After gathering the data, we use the select() function to keep just those variables that may give an indication of what type of pay to expect next. Lastly, we conduct feature engineering by creating three new metrics in the data (in_red_zone, in_fg_range, and two_min_drill) and use if_else() to turn all three into a 1/0 binary.

Before running the next bit of code, be sure that your installed version of dplyr is 1.1.1 or newer.

An issue was discovered in version 1.1.0 that created major computational slowdowns when using case_when() and mutate() together within a group_by() variable. Prior to discovering this issue, running the below code took an absurd 22 minutes.

As a result, you will notice I resorted to using the fcase() function from the data.table() package. You can also use the dt_case_when() function from tidyfast if you prefer to use the same syntax of case_when() but utilize the speed of data.table::ifelse().

After switching to fcase(), the code finished running in 17.69 seconds. After upgrading to dplyr 1.1.1, the case_when() version of the code completed in 18.94 seconds.

model_data <- pbp_prep %>%

group_by(game_id, posteam) %>%

mutate(run_inside = fcase(

play_type == "run" & run_location == "middle", 1,

play_type == "run", 0,

default = 0),

run_outside = fcase(

play_type == "run" & (run_location == "left" |

run_location == "right"), 1,

play_type == "run", 0,

default = 0),

short_pass = fcase(

play_type == "pass" & air_yards <= 5, 1,

play_type == "pass", 0,

default = 0),

medium_pass = fcase(

play_type == "pass" & air_yards > 5 &

air_yards <= 10, 1,

play_type == "pass", 0,

default = 0),

long_pass = fcase(

play_type == "pass" & air_yards > 10, 1,

play_type == "pass", 0,

default = 0),

run = if_else(play_type == "run", 1, 0),

pass = if_else(play_type == "pass", 1, 0),

total_runs = if_else(play_type == "run",

cumsum(run) - 1, cumsum(run)),

total_pass = if_else(play_type == "pass",

cumsum(pass) - 1, cumsum(pass)),

previous_play = if_else(posteam == lag(posteam),

lag(play_type),

"First play of drive"),

previous_play = if_else(is.na(previous_play),

replace_na("First play of drive"),

previous_play)) %>%

ungroup() %>%

mutate(across(c(play_type, season, posteam, defteam,

shotgun, down, qtr, no_huddle,

posteam_timeouts_remaining,

defteam_timeouts_remaining, in_red_zone,

in_fg_range, previous_play, goal_to_go,

two_min_drill), as.factor)) %>%

select(-game_id, -game_date, -week, -play_type,

-yards_gained, -defteam, -run, -pass,

-air_yards, -run_location)We use the mutate() and case_when() functions to create our response variables (run_inside, run_outside, short_pass, medium_pass, long_pass) by providing both the binary 1 and 0 arguments for the play_type. After, we create two more binary columns based on whether the called play was a rush or a pass and then use those two columns to calculate each team’s cumulative runs and passes for each game. We conclude the feature engineering process by using the lag() function to provide the previous play (or, to use “First play of drive” if there was no prior play).

Before moving on to building out the model, we use mutate(across(c()) to turn categorical variables into factors, as well as those numeric variables that are not continuous in nature. Variables that can take on just a limited number of unique values are typically made into a factor. For example, the previous_play variable is categorical and is only capable of being one of two values: run or pass. Because of this, it will be converted into a factor.

Deciding which numeric variables to convert with as.factor() can be a bit more tricky. We can compare the down and half_seconds_remaining variables, as you see the first in the mutate() function but not the second. This is because down is not a continuous variable as it can only take on a specific number of unique values (1, 2, 3, or 4). On the other hand, half_seconds_remaining is not continuous as there is no rhyme or reason to how it appears in the data - or, in other hands, there is no specific amount by which the half_seconds_remaining decreases for each individual play (while there is an ordered way in how down changes).

Because we are focusing on completing a multinomial regression, we must now convert the various play type columns (run_inside, run_outside, etc.) from binary format and then unite all five into a single response variable column titled play_call.

model_data <- model_data %>%

mutate(play_call = case_when(

run_outside == 1 ~ "run_outside",

run_inside == 1 ~ "run_inside",

short_pass == 1 ~ "short_pass",

medium_pass == 1 ~ "medium_pass",

long_pass == 1 ~ "long_pass",

TRUE ~ NA)) %>%

select(-run_outside, -run_inside, -short_pass,

-medium_pass, -long_pass) %>%